Sam Gutsell, Shaquil Sidiki and James Greeley (aka “Three Blind Mice”) at the APL Moot at the YHA in the Lee Valley

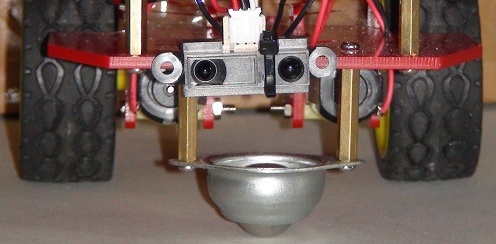

This weekend, the Dyalog C3Pi reached the final stop on the European spring tour, attending the British APL Association’s Annual General Meeting and “Moot” just north of London, where the robot met the famous mice from Optima. On Thursday, the day before, the C3Pi also travelled to an OSHUG meeting in London, where Romilly Cocking was talking about quick2link. Alas, poor C3Pi was confined to a cardboard box due to problems with wiring up its new “eyes”.

Asimov’s third law of robotics states that a robot must protect its own existence. Thanks to the addition of a SHARP infrared distance measuring sensor, our robot is now capable of at least not running head first into walls or other obstructions (and if the obstruction is a spectator, we’re also providing some support for the first law)!

Connecting the Sensor to the Raspberry Pi

The sensor is attached to an analog input pin on the Arduino (we picked pin #0). Our Arduino command interpreter, which allows APL on the Raspberry Pi to use the Arduino as a “controller” for analog and digital I/O, was extended with an “Analog Read” command. It consists of the letter “a” followed by a byte giving the pin number and a dummy pad byte, which ensures that the command length is 3 bytes (the fixed length simplifies the interpreter). Thus, if APL transmits (97 0 0) to address 4 on the I2C bus, and then issues an I2C read command, it will receive a string containing the current voltage (up to 5V, in 1024ths) measured – for example “a0:480;” if the input is about 2.3V. We elected to include a confirmation of the pin number in the result, and separators which will allow us to send several sensor input values in a single string, as we add more sensors to the robot.
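To make the message format concrete, here is a minimal Python sketch of the two ends of that exchange: building the 3-byte command and decoding the reply string. It is an illustration only, not the APL or Arduino code from the project. The function names are made up for this example, the I2C transfer itself is left out, and the multi-sensor reply shape (“a0:480;a1:10;”) is an assumption about how the separators would be used.

```python
import re

ADC_STEPS = 1024   # readings are reported in 1024ths of the reference voltage
V_REF = 5.0        # full-scale input voltage described in the post

def build_analog_read(pin):
    """Return the 3-byte "Analog Read" command: 'a', pin number, pad byte."""
    if not 0 <= pin <= 255:
        raise ValueError("pin must fit in one byte")
    return bytes([ord("a"), pin, 0])

# One reading looks like "a<pin>:<raw>;" (assumed to repeat for more sensors).
_READING = re.compile(r"a(\d+):(\d+);")

def parse_readings(reply):
    """Decode a reply such as "a0:480;" into {pin: raw_value}."""
    return {int(pin): int(raw) for pin, raw in _READING.findall(reply)}

def raw_to_volts(raw):
    """Convert a raw reading in 1024ths of 5V to volts."""
    return raw * V_REF / ADC_STEPS
```

With these helpers, `build_analog_read(0)` yields the bytes (97 0 0), and `parse_readings("a0:480;")` gives `{0: 480}`, which `raw_to_volts` turns into roughly 2.34V.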

In the APLBot GitHub repository, the DyaBot class has been extended to run a background thread which updates the value of a new property called “IRange” every 100ms (a public method UpdateIRange can be called at any time to refresh the value). The input voltage is converted to a distance in centimetres, using the tables from the sensor datasheet. The next blog post will illustrate some of the data that we are now able to collect from the sensor.
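A table-based conversion like this is typically done by interpolating between calibration points. The Python sketch below shows one way to do it with piecewise-linear interpolation; it is not the DyaBot code. The calibration values are placeholders invented for illustration and are NOT taken from the SHARP datasheet, so they would need to be replaced with the real curve for the sensor in use.

```python
from bisect import bisect_left

# Hypothetical (volts, centimetres) calibration points, sorted by voltage.
# Placeholder values only: substitute the curve from the sensor datasheet.
CALIBRATION = [(0.4, 80.0), (0.6, 50.0), (0.9, 30.0), (1.3, 20.0), (2.3, 10.0)]

def volts_to_cm(volts, table=CALIBRATION):
    """Estimate distance by linear interpolation between table entries."""
    volts_axis = [v for v, _ in table]
    # Clamp readings that fall outside the calibrated range.
    if volts <= volts_axis[0]:
        return table[0][1]
    if volts >= volts_axis[-1]:
        return table[-1][1]
    # Find the pair of calibration points that bracket the reading.
    i = bisect_left(volts_axis, volts)
    (v0, d0), (v1, d1) = table[i - 1], table[i]
    return d0 + (d1 - d0) * (volts - v0) / (v1 - v0)
```

Clamping at both ends keeps the result inside the calibrated range, which matters because readings outside it cannot be trusted as distances.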

The DrivePi game code also runs a background thread which monitors the value of IRange, and stops the wheels if the measured distance drops below 20cm – but this code still needs some testing and tweaking.
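The monitoring loop can be sketched in a few lines of Python. This is a hedged illustration of the idea rather than the DrivePi implementation: `read_range_cm` and `stop_wheels` are hypothetical callables standing in for reading IRange and zeroing the wheel speeds, and the 100ms polling period is an assumption borrowed from the IRange update interval.

```python
import threading

STOP_DISTANCE_CM = 20.0  # threshold mentioned in the post

def monitor_range(read_range_cm, stop_wheels, halt,
                  period_s=0.1, threshold_cm=STOP_DISTANCE_CM):
    """Poll the range until `halt` is set, stopping the wheels when too close.

    read_range_cm and stop_wheels are hypothetical callables supplied by the
    caller; halt is a threading.Event used to end the loop cleanly.
    """
    while not halt.is_set():
        if read_range_cm() < threshold_cm:
            stop_wheels()
        # Sleep for one period, but wake immediately if halt is set.
        halt.wait(period_s)
```

To run it in the background, pass the function to `threading.Thread(target=monitor_range, args=(read, stop, halt), daemon=True)` and call `halt.set()` when the game ends.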

Accelerometer and Gyro on the Way

My experience to date is that controlling the robot is a little bit tricky, because the output of the wheels seems quite variable (changes from day to day, and from hour to hour). Determining the position of the robot using “dead reckoning” based on the voltage applied to the wheels seems unlikely to succeed. We either need to find some more reliable wheels – or find some sensors that can help us understand what is going on.

I have been able to lay my hands on an MPU-6050 motion tracking device from InvenSense. We’ll be trying to wire this up over the next couple of weeks and see whether it allows us to accurately track the motion of the robot. We will also soon take delivery of an ultrasonic sonar mounted on a servo, so we can start measuring longer distances accurately (the IR sensor is really only suitable for collision avoidance). Once these are wired up, all we have to do is write “a little” APL code to do the localization and mapping!
