Grid Computing with Dyalog

by Risto Saikko, CEO Techila Technologies

The following article is based on the presentation given by Techila at Dyalog '07 – Dyalog's world wide user conference – in Princeton, New Jersey on October 1st 2007.

The article describes how computational tasks can run in minutes instead of hours in a virtual supercomputer.

The lack of Computing Power

In today's business environment, research and product development projects rely heavily on computation in its many forms i.e. for task such as optimization, modelling, simulations, or data analysis. The frequent problem many companies face is the profound lack of sufficient computational capacity. The very nature of technical computing is to continuously address the next most difficult problem. Businesses who needs to solve heavy computational tasks can easily identify a number of problems that would require one to three orders of magnitude (10x to 1000x) more computing power than what they currently have available. They may be able to solve these problems using existing capacity, but the offset is that instead of hours, these problems may require days or even weeks to solve. A solution, which is rarely neither possible nor practical.

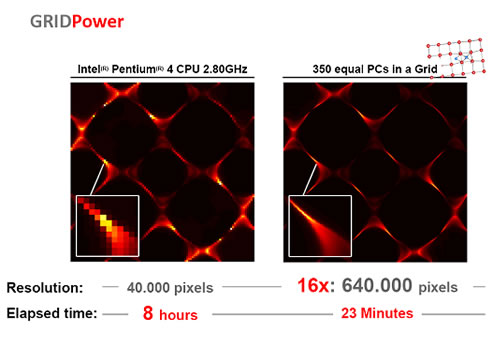

An example of a common customer problem is a simulation task which requires 5 days and 8 hours (128 hours) to run. Even the simplified version of this simulation task takes 8 hours to run. The customer obviously needs the results today, preferably as soon as possible.

The picture above shows how the computation task – which previously took 8 hours - was run in 23 minutes with 16 times better accuracy. Or put in other words – the performance improvement factor was 340! This means that by employing the grid technology the customer was able to run the original full-scale task in 23 minutes.

Examples of potential customer problems in different industry sectors

Financial services – Portfolio modelling, stochastic valuation reporting, risk analysis and asset liability management – speed up analysis and improve critical decision making.

Oil & Gas & Mining Industries – Reservoir modelling, 2D & 3D seismic processing, and horizontal drilling benefit – increase project scope and improve accuracy of data analysis.

Industrial engineering – Supply chain, logistics, and plant and process modelling, simulation and optimization – achieving dramatically higher return on capital.

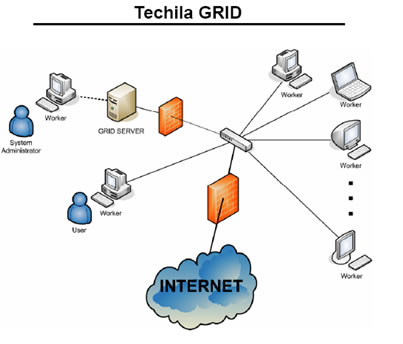

Techila creates a supercomputer from your existing PCs

It is evident that in normal office use the full capacity of a PC is not well utilized. Only approximately 5% of the processor capacity is in use. The remaining 95 % is mostly used for generating heat – which admittedly is very nice on a cold winter day. Techila GRID harnesses the unused 95% of the PC capacity to create instant positive bottom line impact. The philosophy behind the technology in Techila's Grid solution is to distribute computation task to PCs which subsequently run the computation as a background task. The normal use of the PC is not disturbed, and the capacity that the user of the PC doesn't need is used for something rather more efficient than heat generation.

Why does it work so well?

In Techila's solution the intelligence is distributed. As the Clients are also intelligent they reduce the management tasks needed from the server. This helps to drastically minimize network traffic, provides a huge advantage on the scalability and leads to Autonomic Management which means:

- Self-healing – discovering, diagnosing, and preventing disruptions.

- Self-configuration – modifying interactions and behaviours based on the changes in the environment.

- Self-optimisation – tuning resource usage and improving workload balancing.

- Self-protection – detecting, identifying and protecting against failure and security attacks.

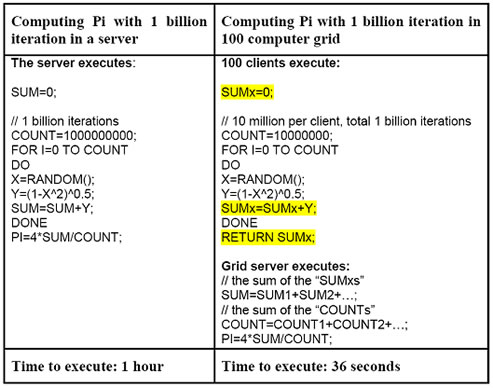

Gridifying a computational problem

One of the main advantages in grid computing is that there is no need to do any rewriting of the code. The existing programs can be modified to operate as distributable executed programs with slight changes – if any at all. Usually this means adding some parameters to the programs execution interface, and possibly modifying some of the program code changing variables to the parameters as well. The added parameters describe how the problem is divided into the grid and which parts of the problem each of the clients will solve. The variables changed into the parameters usually describes the problem itself, or they may define how detailed the solution should be. The amount of the parameters is depending on the nature of the problem and they are defined independently for each individual case scenario. Below you will find an illustration of what this means on code level. The example represents a Monte Carlo simulation commonly used for example in the Finance sector.

As the modified program gets on, a simple, generic Java code runs alongside it. This will supply all the needed parameters to the native program and will further collect the results from the program. The modified programs and the Java code are packed into modules. The rarely changing and/or static data are stored in modules as well. The modules are stored on the server, and they are transferred to the clients when needed. Usually this means that a module is transferred the first time a client is about to compute the problem. Modules already transferred to the clients will not be re-transferred unless they are changed and/or upgraded. The server side will also be equipped with a dedicated simple module which contains instructions with regards to how the problem is distributed and which parameters are required to solve the problem.

What types of computational problems can be Gridified?

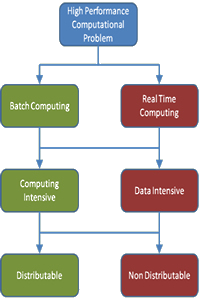

The following illustration shows how a high performance computational problem can be analyzed by studying the behaviour of the problem itself.

Firstly, a problem is either a batch process or a real time process. Computation done in a flight simulator is a good example of high performance real time computing.

The second layer divides the problem into either computing intensive or data intensive categories. A computing intensive task is, for example, the earlier described MonteCarlo simulation of Pi (π). Whereas data analysis of, for example, an insurance data base is a heavily data intensive process.

The third layer divides the computational problem into distributable and non-distributable tasks. If the algorithm in the problem cannot be parallelized or the parallelized parts need to communicate among themselves, the problem is difficult to gridify. In this case it may be possible to gridify the problem by parallelizing the input data and the result pairs but not parallelizing the algorithm. This means executing the algorithm with several different input data values each in the grid client – like in many optimization cases.

Co-Operation with Dyalog

At the moment the examples given are gridified at the application level. We are currently looking for software products made with Dyalog APL in order to find out if it is feasible to gridify them. Our goal is to gridify at APL interpreter level and we are looking for seamless integration with Dyalog.

If you would like to know more about Techila's Grid technology and how it could potentially apply to our software application please contact Risto Saikko.