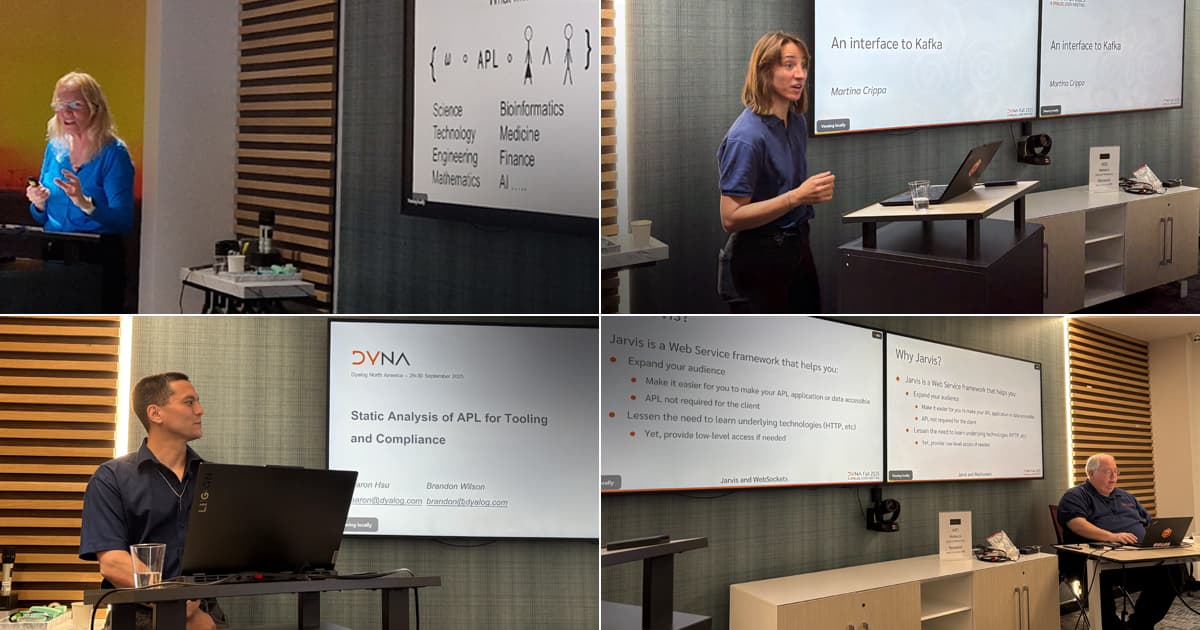

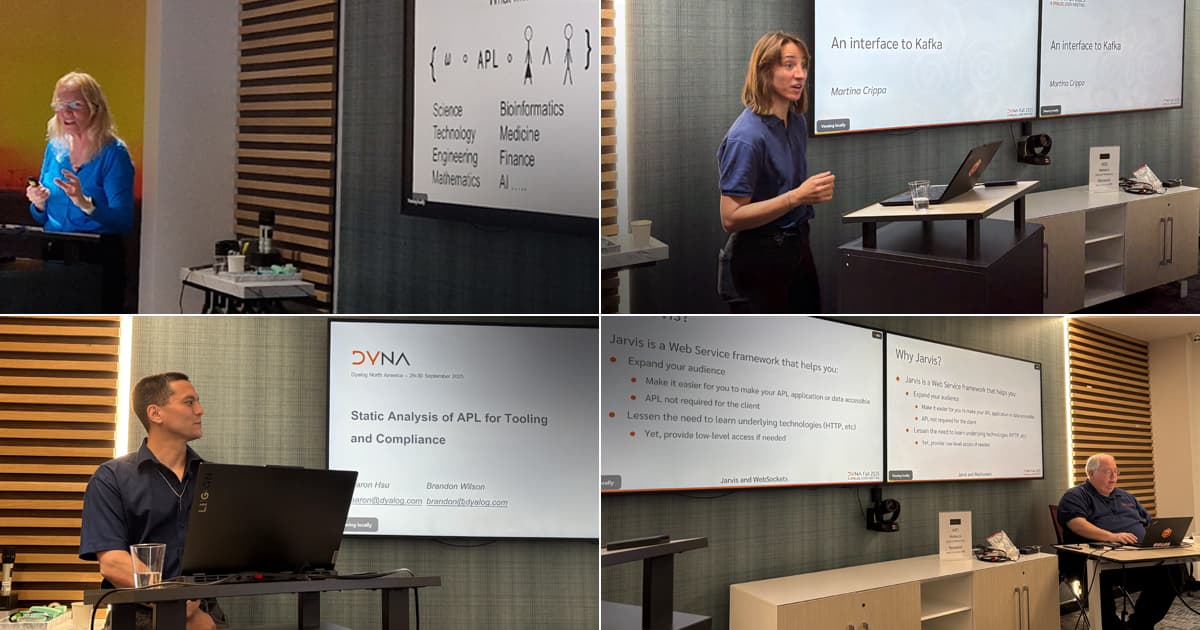

DYNA Fall 2025 brought together APL enthusiasts, customers, and Dyalog Ltd staff for two days of presentations and workshops in New York City. Attendance was strong, energy was high, and the sessions showcased both the maturity and momentum of the Dyalog ecosystem.

The event took place in the Jay Suites Conference Center at the end of September. We’d arranged a bigger venue this time, but it was still filled to capacity! There were eight members of Team Dyalog present, and the European contingent gathered at Brian Becker’s house for a few days beforehand for a “Conclave”. As we’re a geographically distributed team, we try to take the opportunities that present themselves to work together in person – our thanks to Brian for hosting.

Day 1: Presentations

The Dyalog Road Map – Fall 2025 Edition

Morten Kromberg

First impressions: so many people! Old friends, new acquaintances, and a palpable buzz in the air. Our CTO, Morten, started proceedings with his customary roadmap and vision – what have we done, what are we doing now, and where are we going next. He highlighted both the necessary Sisyphean work, such as the ongoing improvements and interfaces to other systems, and the more exciting developments, such as new language features (array and namespace notation, ⎕VSET, ⎕VGET, and inline tracing). Morten emphasised our growth, in terms of both revenue and staff. He introduced our newest hires, including Asher Harvey-Smith, whose first day happened to be today!

Dyalog and AI

Stefan Kruger

Following Morten’s presentation, Stefan talked about Dyalog and AI. AI is a hot topic that impacts most companies in some way, but, because APL is a minority language, LLM performance on APL code has been poor. Stefan’s talk covered some of the recent developments in the field, highlighting the fact that frontier models today are capable of explaining even quite complex APL code correctly, but that they still struggle to write code unaided. He demonstrated an LLM agent capable of executing code, running tests, and reading documentation – showing that while this improved performance, we’re still far from the productivity improvements that a Python developer could expect.

JAWS – Jarvis And WebSockets

Brian Becker

Brian took over to present JAWS. No, not the 70s movie, but the WebSocket extension to Jarvis: JAWS = Jarvis And WebSockets (Jarvis is our web service framework). He outlined the different use cases for HTTP, contrasted them with the use cases for which you need something else, and explained how WebSockets can fill that gap. Brian showed some practical examples of how WebSockets are used, and how the WebSocket functionality slots neatly into Jarvis.

A Dyalog Interface to Kafka

Martina Crippa

Martina introduced us to Apache Kafka, an event-streaming platform widely used as a backbone in the data infrastructure in large organisations, such as banks. Large cloud providers often use Kafka as the glue between their services. Martina has been working on a Dyalog interface to Kafka. She explained why it matters, and demonstrated the APL Kafka API live. The Dyalog Kafka interface is being built primarily in response to customer requests, but it’s fully open source and we will be offering optional paid support packages for those that so wish.

Static Analysis of APL for Tooling and Compliance

Aaron Hsu and Brandon Wilson

The morning finished with Aaron and Brandon talking about the Static Analysis project. Static Analysis is becoming increasingly important, especially in regulated industries, where compliance demands for such checks percolate through all the way down to platform vendors. They demonstrated the principles upon which the Dyalog Static Analyser is being built: the parser from the Co-Dfns compiler, which has been extended to handle the whole of Dyalog APL. A clear visualisation of how the static analyser’s rules select features from the analysed code showcased the potential of this approach. Presenting as a double-act is hard to get right, but Aaron and Brandon’s presentation went down well. Static analysis is challenging at the best of times, but demand is particularly acute in finance, where regulatory compliance drives tooling requirements.

Lessons Learned when Converting from APL+Win to Dyalog APL

Alex Holtzapple of Metsim International (MSI)

METSIM® is an all-in-one solution for mining and metallurgical operations; it is used in 57(!) different countries around the world – “in the remotest corners of the map”, as Alex put it. Over the past 18 months, Alex and MSI have worked closely with us to migrate METSIM® from APL+Win to Dyalog. After introducing himself and METSIM®, Alex described the process of working with Dyalog Ltd. He had a clear vision of what he wanted to achieve: he specifically wanted to preserve the UI’s design as it stands, because that places less of a burden on customers to modify their workflows and established processes. The migration has been a successful project, and the Dyalog-based product is now in the hands of their customers. Both MSI and Dyalog Ltd learned important lessons from the migration project. Alex said that they’d thought about this migration project for a long time, but what finally swung the decision was a visit to our HQ in Bramley, UK, to “look the team in the eye”.

Dyalog APL: Our (Not So) Secret Ingredient

Mark Wolfson of BIG

Mark Wolfson told us how they’re disrupting the jewellery business, and how Dyalog is forming a central component in this. BIG’s stack is a great example of a modern, heterogeneous services architecture: by the very nature of the business, they need to be able to consume data from a multitude of diverse systems and protocols. After this data has been transformed into a common format, it is then consumed by several internal systems to “derive insight from chaos”. BIG has always been an APL promoter, and Mark is doubling down on this. BIG is increasingly reliant on APL, and they’re investing significantly in their capabilities.

The Data Science Journey

Josh David

Josh talked us through Dyalog’s data science journey. Dyalog APL has been a natural fit for data analysis since long before the term ‘Data Science’ became fashionable. Today, the field is – like so many others – dominated by Python and R. At Dyalog Ltd, we firmly believe that we have a role to play in this space, and we’re actively trying to attract new practitioners. Josh recounted his experience exhibiting at the 2025 Joint Statistical Meetings (JSM), Nashville, together with Martina Crippa, Rich Park, and Steve Mansour. There was a lot of interest from delegates, especially when they were walked through the extremely compact formulation of the k-means clustering algorithm in APL. A renewed focus on the Data Science application of Dyalog APL will inevitably impact our development roadmap – we need to improve both our data ingest story and the raw performance in some key areas.

Josh also showcased Steve Mansour’s statistics package TamStat. TamStat is primarily intended as a package for teaching statistics, but it has several other interesting facets, too: it can be used as a library for statistics routines that you can use in your own applications (it’s open source), and also as a “statistics DSL” – a compact, dedicated way to express and evaluate statistics formulae.

What Can Vectorised Trees Do For You?

Asher Harvey-Smith

Asher is the newest member of Team Dyalog, and he has started his role by giving a presentation and hosting a workshop on the first two days of his employment! However, his association with Dyalog Ltd goes back longer than that, as he has previously completed two internships (the second as “senior intern”), and is already a seasoned presenter (he stepped up to the podium at the Dyalog ’24 user meeting in Glasgow). Asher wants to popularise the tree manipulation techniques used in the Co-Dfns compiler and also in the static analyser. Through a set of clear examples and animations, Asher has found a great pedagogical treatment of a set of techniques that many people have had difficulties grappling with. Asher also outlined when the “parent vector” technique is not appropriate.
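For readers who haven’t met the “parent vector” idea before, here is a minimal sketch of it in Python rather than APL. It is an illustration under our own assumptions, not code from Asher’s talk or from Co-Dfns: the tree is stored as a flat list in which each entry holds the index of that node’s parent, the root is assumed to be its own parent, and the helper names `depths` and `children` are hypothetical. The point is that questions about the tree become whole-array operations instead of recursive walks.

```python
# Parent-vector representation (illustrative sketch):
# parent[i] is the index of node i's parent; the root (node 0) is its own parent.
#
#        0
#       / \
#      1   4
#     / \   \
#    2   3   5
parent = [0, 0, 1, 1, 0, 4]


def depths(parent):
    """Depth of every node, found by stepping all nodes to their parents at once."""
    depth = [0] * len(parent)
    current = list(range(len(parent)))
    while True:
        nxt = [parent[i] for i in current]            # move every node up one level
        moved = [a != b for a, b in zip(current, nxt)]
        if not any(moved):                            # every path has reached the root
            return depth
        depth = [d + m for d, m in zip(depth, moved)]
        current = nxt


def children(parent, node):
    """Indices of the direct children of `node` (a single scan of the vector)."""
    return [i for i, p in enumerate(parent) if p == node and i != node]


print(depths(parent))       # [0, 1, 2, 2, 1, 2]
print(children(parent, 1))  # [2, 3]
```

In APL the same steps are typically written as array expressions over the parent vector, which is where the compactness comes from.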

ArrayLab: Building a 3D APL Game with raylibAPL

Holden Hoover, University of Waterloo

Holden Hoover, the inaugural APL Forge winner in 2024, demonstrated a 3D game called ArrayLab that he’s been building on top of Brian Ellingsgaard’s raylibAPL (Brian is also a former summer intern at Dyalog Ltd). One purpose for developing the ArrayLab game was to test the raylibAPL bindings whilst simultaneously exercising the Dyalog interpreter. When working with native extensions there are a lot of things that can go wrong! Holden also had to learn a lot about game development, in particular in-game physics. The ArrayLab game is a work in progress, but he showed a live walk-through of his progress so far, demonstrating correct physics and collision detection.

The APL Trust US

Diane Hymas and Mark Wolfson, The APL Trust US

The first day concluded with Diane Hymas and Mark Wolfson reporting on the progress they, together with a wider team, have been able to make on the APL Trust US. The purpose of the APL Trust is to “give back” to the community. If you have an idea for something that you want to do with APL, you can apply for funding help from the APL Trust – the application process is being formalised at the moment. The good news from Diane was that the APL Trust is now launched as a registered tax-deductible charity.

Socialising!

An important part of multi-day gatherings of this kind is the impromptu hallway encounters – networking opportunities where like-minded people meet and learn from one another. After the presentations had completed, we retreated to the Yard House restaurant a few blocks up the road for dinner, conversation, and making friends. For several of us, this was our first visit to New York, and taking in the sights and sounds of Times Square at night is definitely an experience!

Day 2: Workshops

Tuesday was workshop day. We had a packed programme across two streams. In the morning, you could choose between learning how to use Jarvis with Brian and Stefan, or a deep-dive into namespaces with Josh, Morten, and Martina.

We have found that the namespace aspect of APL is frequently misunderstood, and we’ve run the “Introduction to Namespaces” workshop a few times now. Josh was managing a full house, with Morten and Martina assisting. In the room next door, Brian gave a hands-on, practical introduction to Jarvis and JAWS, with detailed explanations of the different use cases. Jarvis is already a core component in many users’ deployed Dyalog applications; with the introduction of web socket support, Jarvis/JAWS will find its way into more application deployments.

In the afternoon you could choose between learning how to use Link with instruction from Morten and Stefan, or an introduction to the key operator with Asher, who also attracted a full house.

Key is one of the advanced operators in Dyalog APL, and mastery unlocks a lot of applications, especially in the Data Science domain. Simplifying hugely, the science goes to the left and the data goes to the right! Asher had a job on his hands teaching such a large group with a range of abilities, but he was ably assisted by Martina and Josh. Next door, Morten was helping the group through gradually more complex source code scenarios with Link. Link is now into its fourth major version, and is a mature workflow component that lets Dyalog users take advantage of a range of external tools that expect to operate on text files, such as Git, GitHub, VS Code, and many others.
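Coming back to the key operator from Asher’s workshop: for anyone who hasn’t used it, the rough idea can be sketched in Python. This is only an analogue under our own assumptions, not how Dyalog implements key, and the helper name `key` is hypothetical. The function you supply is called once per unique key, receiving that key and the items grouped under it, with keys taken in order of first appearance. When no separate data is given, the sketch groups positions instead (0-based here; in APL the index origin depends on `⎕IO`).

```python
def key(f, keys, values=None):
    """Call f(unique_key, grouped_values) once per unique key, in order of first appearance."""
    if values is None:
        values = list(range(len(keys)))   # no data supplied: group the positions instead
    groups = {}                            # dicts preserve insertion order (Python 3.7+)
    for k, v in zip(keys, values):
        groups.setdefault(k, []).append(v)
    return [f(k, vs) for k, vs in groups.items()]


# Frequency count: how many times does each letter occur?
print(key(lambda k, vs: (k, len(vs)), "mississippi"))
# [('m', 1), ('i', 4), ('s', 4), ('p', 2)]

# Grouped sum: total amount per category.
print(key(lambda k, vs: (k, sum(vs)), ["a", "b", "a"], [10, 20, 30]))
# [('a', 40), ('b', 20)]
```

In APL the frequency count above is commonly written as `{⍺,≢⍵}⌸'mississippi'`, with the function to apply written to the left of `⌸`.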

In Conclusion…

The 2025 Fall DYNA conference was a well-attended, well-received event, with a great mix of newcomers, veterans, customers, and members of Team Dyalog. The highlights for us were the two customer presentations from Alex Holtzapple and Mark Wolfson – it is always interesting for us to see how people use our product in the field! Alex is new to Dyalog, and it was fantastic to hear him reporting such a positive experience and outcome. Although Mark has been a Dyalog user for longer, he was no less enthusiastic, and to hear that they’re really growing their APL development team is a vote of confidence. It was also great to hear how The APL Trust US is taking off.

DYNA Fall 2025 reflected our growing momentum, both in technology and community. Each talk underscored a shared commitment to pushing APL forward, not just as a language, but as a living ecosystem shaped by its practitioners.

Materials from DYNA Fall 2025 are being uploaded to the event webpage as they become available.
