Introduction

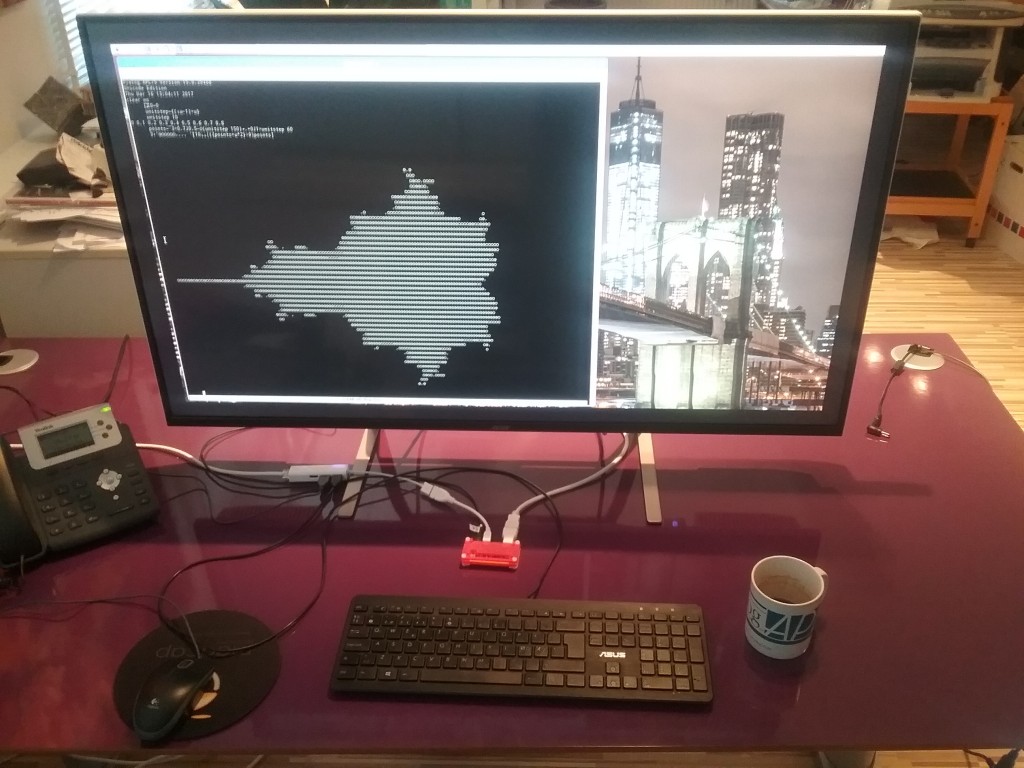

The e-mail arrived in the early afternoon from Morten, in Finland attending the FinnAPL Forest Seminar.

0 cmpx 'e←⊃∨/0.2 edges¨r g b'

6.4E¯1

edges

{⍺←0.7 ⋄ 1 1↓¯1 ¯1↓⍺<(|EdgeDetect apply ⍵)÷1⌈(+⌿÷≢),⍵}

apply

{stencil←⍺ ⋄ {+/,⍵×stencil}⌺(⍴stencil)⊢⍵}

EdgeDetect

¯1 ¯1 ¯1

¯1 8 ¯1

¯1 ¯1 ¯1

(r g b in the attached ws)

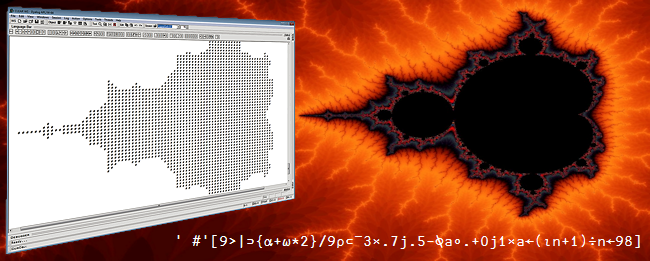

Background

⌺ is stencil, a new dyadic operator which will be available in version 16.0. In brief, f⌺s applies the operand function f to windows of size s. The window is moved over the argument centered over every possible position. The size is commonly odd and the movement commonly 1. For example:

{⊂⍵}⌺5 3⊢6 5⍴⍳30

┌───────┬────────┬────────┬────────┬───────┐

│0 0 0│ 0 0 0│ 0 0 0│ 0 0 0│ 0 0 0│

│0 0 0│ 0 0 0│ 0 0 0│ 0 0 0│ 0 0 0│

│0 1 2│ 1 2 3│ 2 3 4│ 3 4 5│ 4 5 0│

│0 6 7│ 6 7 8│ 7 8 9│ 8 9 10│ 9 10 0│

│0 11 12│11 12 13│12 13 14│13 14 15│14 15 0│

├───────┼────────┼────────┼────────┼───────┤

│0 0 0│ 0 0 0│ 0 0 0│ 0 0 0│ 0 0 0│

│0 1 2│ 1 2 3│ 2 3 4│ 3 4 5│ 4 5 0│

│0 6 7│ 6 7 8│ 7 8 9│ 8 9 10│ 9 10 0│

│0 11 12│11 12 13│12 13 14│13 14 15│14 15 0│

│0 16 17│16 17 18│17 18 19│18 19 20│19 20 0│

├───────┼────────┼────────┼────────┼───────┤

│0 1 2│ 1 2 3│ 2 3 4│ 3 4 5│ 4 5 0│

│0 6 7│ 6 7 8│ 7 8 9│ 8 9 10│ 9 10 0│

│0 11 12│11 12 13│12 13 14│13 14 15│14 15 0│

│0 16 17│16 17 18│17 18 19│18 19 20│19 20 0│

│0 21 22│21 22 23│22 23 24│23 24 25│24 25 0│

├───────┼────────┼────────┼────────┼───────┤

│0 6 7│ 6 7 8│ 7 8 9│ 8 9 10│ 9 10 0│

│0 11 12│11 12 13│12 13 14│13 14 15│14 15 0│

│0 16 17│16 17 18│17 18 19│18 19 20│19 20 0│

│0 21 22│21 22 23│22 23 24│23 24 25│24 25 0│

│0 26 27│26 27 28│27 28 29│28 29 30│29 30 0│

├───────┼────────┼────────┼────────┼───────┤

│0 11 12│11 12 13│12 13 14│13 14 15│14 15 0│

│0 16 17│16 17 18│17 18 19│18 19 20│19 20 0│

│0 21 22│21 22 23│22 23 24│23 24 25│24 25 0│

│0 26 27│26 27 28│27 28 29│28 29 30│29 30 0│

│0 0 0│ 0 0 0│ 0 0 0│ 0 0 0│ 0 0 0│

├───────┼────────┼────────┼────────┼───────┤

│0 16 17│16 17 18│17 18 19│18 19 20│19 20 0│

│0 21 22│21 22 23│22 23 24│23 24 25│24 25 0│

│0 26 27│26 27 28│27 28 29│28 29 30│29 30 0│

│0 0 0│ 0 0 0│ 0 0 0│ 0 0 0│ 0 0 0│

│0 0 0│ 0 0 0│ 0 0 0│ 0 0 0│ 0 0 0│

└───────┴────────┴────────┴────────┴───────┘

{+/,⍵}⌺5 3⊢6 5⍴⍳30

39 63 72 81 57

72 114 126 138 96

115 180 195 210 145

165 255 270 285 195

152 234 246 258 176

129 198 207 216 147

In addition, for matrix right arguments with movement 1, special code is provided for the following operand functions:

{∧/,⍵} {∨/,⍵} {=/,⍵} {≠/,⍵}

{⍵} {,⍵} {⊂⍵} {+/,⍵}

{+/,A×⍵} {E<+/,A×⍵}

{+/⍪A×⍤2⊢⍵} {E<+/⍪A×⍤2⊢⍵}

The comparison < can be one of < ≤ = ≠ ≥ >.

The variables r g b in the problem are integer matrices with shape 227 316 having values between 0 and 255. For present purposes we can initialize them to random values:

r←¯1+?227 316⍴256

g←¯1+?227 316⍴256

b←¯1+?227 316⍴256

Opening Moves

I had other obligations and did not attend to the problem until 7 PM. A low-hanging fruit was immediately apparent: {+/,⍵×A}⌺s is not implemented by special code but {+/,A×⍵}⌺s is. The difference in performance is significant:

edges1←{⍺←0.7 ⋄ 1 1↓¯1 ¯1↓⍺<(|EdgeDetect apply1 ⍵)÷1⌈(+⌿÷≢),⍵}

apply1←{stencil←⍺ ⋄ {+/,stencil×⍵}⌺(⍴stencil)⊢⍵}

cmpx 'e←⊃∨/0.2 edges1¨r g b' 'e←⊃∨/0.2 edges ¨r g b'

e←⊃∨/0.2 edges1¨r g b → 8.0E¯3 | 0% ⎕

e←⊃∨/0.2 edges ¨r g b → 6.1E¯1 | +7559% ⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕

I fired off an e-mail reporting this good result, then turned to other more urgent matters. An example of the good being an enemy of the better, I suppose.

When I returned to the problem at 11 PM, the smug good feelings have largely dissipated:

- Why should

{+/,A×⍵}⌺sbe so much faster than{+/,⍵×A}⌺s? That is, why is there special code for the former but not the latter? (Answer: all the special codes end with… ⍵}.) - I can not win: If the factor is small, then why isn’t it larger; if it is large, it’s only because the original code wasn’t very good.

- The large factor is because, for this problem, C (special code) is still so much faster than APL (magic function).

- If the absolute value can somehow be replaced, the operand function can then be in the form

{E<+/,A×⍵}⌺s, already implemented by special code. Alternatively,{E<|+/,A×⍵}⌺scan be implemented by new special code.

Regarding the last point, the performance improvement potentially can be:

edges2←{⍺←0.7 ⋄ 1 1↓¯1 ¯1↓(⍺ EdgeDetect)apply2 ⍵}

apply2←{threshold stencil←⍺ ⋄

{threshold<+/,stencil×⍵}⌺(⍴stencil)⊢⍵}

cmpx (⊂'e←⊃∨/0.2 edges'),¨'21 ',¨⊂'¨r g b'

e←⊃∨/0.2 edges2¨r g b → 4.8E¯3 | 0%

* e←⊃∨/0.2 edges1¨r g b → 7.5E¯3 | +57%

* e←⊃∨/0.2 edges ¨r g b → 7.4E¯1 | +15489% ⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕

The * in the result of cmpx indicates that the expressions don’t give the same results. That is expected; edges2 is not meant to give the same results but is to get a sense of the performance difference. (Here, an additional factor of 1.57.)

edges1 has the expression ⍺<matrix÷1⌈(+⌿÷≢),⍵ where ⍺ and the divisor are both scalars. Reordering the expression eliminates one matrix operation: (⍺×1⌈(+⌿÷≢),⍵)<matrix. Thus:

edges1a←{⍺←0.7 ⋄ 1 1↓¯1 ¯1↓(⍺×1⌈(+⌿÷≢),⍵)<|EdgeDetect apply1 ⍵}

cmpx (⊂'e←⊃∨/0.2 edges'),¨('1a' '1 ' ' '),¨⊂'¨r g b'

e←⊃∨/0.2 edges1a¨r g b → 6.3E¯3 | 0%

e←⊃∨/0.2 edges1 ¨r g b → 7.6E¯3 | +22%

e←⊃∨/0.2 edges ¨r g b → 6.5E¯1 | +10232% ⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕

The time was almost 1 AM. To bed.

Further Maneuvers

Fresh and promising ideas came with the morning. The following discussion applies to the operand function {+/,A×⍵}.

(0) Scalar multiple: If all the elements of A are equal, then {+/,A×⍵}⌺(⍴A)⊢r ←→ (⊃A)×{+/,⍵}⌺(⍴A)⊢r.

A←3 3⍴?17

({+/,A×⍵}⌺(⍴A)⊢r) ≡ (⊃A)×{+/,⍵}⌺(⍴A)⊢r

1

(1) Sum v inner product: {+/,⍵}⌺(⍴A)⊢r is significantly faster than {+/,A×⍵}⌺(⍴A)⊢r because the former exploits mathematical properties absent from the latter.

A←?3 3⍴17

cmpx '{+/,⍵}⌺(⍴A)⊢r' '{+/,A×⍵}⌺(⍴A)⊢r'

{+/,⍵}⌺(⍴A)⊢r → 1.4E¯4 | 0% ⎕⎕⎕

* {+/,A×⍵}⌺(⍴A)⊢r → 1.3E¯3 | +828% ⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕

(The * in the result of cmpx is expected.)

(2) Linearity: the stencil of the sum equals the sum of the stencils.

A←?3 3⍴17

B←?3 3⍴17

({+/,(A+B)×⍵}⌺(⍴A)⊢r) ≡ ({+/,A×⍵}⌺(⍴A)⊢r) + {+/,B×⍵}⌺(⍴A)⊢r

1

(3) Middle: If B is zero everywhere except the middle, then {+/,B×⍵}⌺(⍴B)⊢r ←→ mid×r where mid is the middle value.

B←(⍴A)⍴0 0 0 0 9

B

0 0 0

0 9 0

0 0 0

({+/,B×⍵}⌺(⍴B)⊢r) ≡ 9×r

1

(4) A faster solution.

A←EdgeDetect

B←(⍴A)⍴0 0 0 0 9

C←(⍴A)⍴¯1

A B C

┌────────┬─────┬────────┐

│¯1 ¯1 ¯1│0 0 0│¯1 ¯1 ¯1│

│¯1 8 ¯1│0 9 0│¯1 ¯1 ¯1│

│¯1 ¯1 ¯1│0 0 0│¯1 ¯1 ¯1│

└────────┴─────┴────────┘

A ≡ B+C

1

Whence:

({+/,A×⍵}⌺(⍴A)⊢r) ≡ ({+/,B×⍵}⌺(⍴A)⊢r) + {+/,C×⍵}⌺(⍴A)⊢r ⍝ (2)

1

({+/,A×⍵}⌺(⍴A)⊢r) ≡ ({+/,B×⍵}⌺(⍴A)⊢r) - {+/,⍵}⌺(⍴A)⊢r ⍝ (0)

1

({+/,A×⍵}⌺(⍴A)⊢r) ≡ (9×r) - {+/,⍵}⌺(⍴A)⊢r ⍝ (3)

1

Putting it all together:

edges3←{

⍺←0.7

mid←⊃EdgeDetect↓⍨⌊2÷⍨⍴EdgeDetect

1 1↓¯1 ¯1↓(⍺×1⌈(+⌿÷≢),⍵)<|(⍵×1+mid)-{+/,⍵}⌺(⍴EdgeDetect)⊢⍵

}

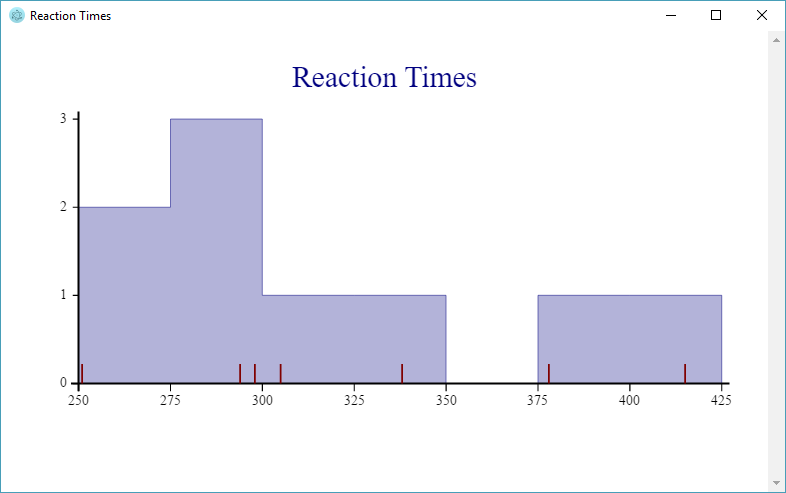

Comparing the various edges:

x←(⊂'e←⊃∨/0.2 edges'),¨('3 ' '2 ' '1a' '1 ' ' '),¨⊂'¨r g b'

cmpx x

e←⊃∨/0.2 edges3 ¨r g b → 3.4E¯3 | 0%

* e←⊃∨/0.2 edges2 ¨r g b → 4.3E¯3 | +25%

e←⊃∨/0.2 edges1a¨r g b → 6.4E¯3 | +88%

e←⊃∨/0.2 edges1 ¨r g b → 7.5E¯3 | +122%

e←⊃∨/0.2 edges ¨r g b → 6.5E¯1 | +19022% ⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕⎕

edges |

original |

edges1 |

uses {+/,stencil×⍵} instead of {+/,⍵×stencil} |

edges1a |

uses (⍺×mean,⍵)<matrix instead of ⍺<matrix÷mean,⍵ |

edges2 |

uses {threshold<+/,stencil×⍵} |

edges3 |

uses the faster {+/,⍵} instead of {+/,stencil×⍵} and other maneuvers |

Fin

I don’t know if the Finns would be impressed. The exercise has been amusing in any case.

Follow

Follow